Thursday, December 13, 2018

AWS RoboMaker: Robot Operating System (ROS), with connectivity to cloud services.

https://aws.amazon.com/robomaker/

AWS RoboMaker

Easily develop, test, and deploy intelligent robotics applications

AWS RoboMaker is a service that makes it easy to develop, test, and deploy intelligent robotics applications at scale. RoboMaker extends the most widely used open-source robotics software framework, Robot Operating System (ROS), with connectivity to cloud services. This includes AWS machine learning services, monitoring services, and analytics services that enable a robot to stream data, navigate, communicate, comprehend, and learn. RoboMaker provides a robotics development environment for application development, a robotics simulation service to accelerate application testing, and a robotics fleet management service for remote application deployment, update, and management.

Robots are machines that sense, compute, and take action. Robots need instructions to accomplish tasks, and these instructions come in the form of applications that developers code to determine how the robot will behave. Receiving and processing sensor data, controlling actuators for movement, and performing a specific task are all functions that are typically automated by these intelligent robotics applications. Intelligent robots are increasingly used in warehouses to distribute inventory, in homes to carry out tedious housework, and in retail stores to provide customer service. Robotics applications use machine learning to perform more complex tasks like recognizing an object or face, having a conversation with a person, following a spoken command, or navigating autonomously. Until now, developing, testing, and deploying intelligent robotics applications was difficult and time consuming. Building intelligent robotics functionality using machine learning is complex and requires specialized skills. Setting up a development environment can take each developer days, and building a realistic simulation system to test an application can take months because of the underlying infrastructure needed. Once an application has been developed and tested, a developer needs to build a deployment system to deploy the application into the robot and later update the application while the robot is in use.

AWS RoboMaker provides the tools to make building intelligent robotics applications more accessible, a fully managed simulation service for quick and easy testing, and a deployment service for lifecycle management. AWS RoboMaker removes the heavy lifting from each step of robotics development so you can focus on creating innovative robotics applications.

What is AWS RoboMaker?

How it works

AWS RoboMaker provides four core capabilities for developing, testing, and deploying intelligent robotics applications.

Cloud Extensions for ROS

Robot Operating System, or ROS, is the most widely used open source robotics software framework, providing software libraries that help you build robotics applications. AWS RoboMaker provides cloud extensions for ROS so that you can offload to the cloud the more resource-intensive computing processes that are typically required for intelligent robotics applications and free up local compute resources. These extensions make it easy to integrate with AWS services like Amazon Kinesis Video Streams for video streaming, Amazon Rekognition for image and video analysis, Amazon Lex for speech recognition, Amazon Polly for speech generation, and Amazon CloudWatch for logging and monitoring. RoboMaker provides each of these cloud service extensions as open source ROS packages, so you can build functions on your robot by taking advantage of cloud APIs, all in a familiar software framework.

Development Environment

AWS RoboMaker provides a robotics development environment for building and editing robotics applications. The RoboMaker development environment is based on AWS Cloud9, so you can launch a dedicated workspace to edit, run, and debug robotics application code. RoboMaker's development environment comes with the operating system, development software, and ROS already downloaded, compiled, and configured. Plus, RoboMaker cloud extensions and sample robotics applications are pre-integrated in the environment, so you can get started in minutes.

Simulation

Simulation is used to understand how robotics applications will act in complex or changing environments, so you don’t have to invest in expensive hardware or in setting up physical testing environments. Instead, you can use simulation for testing and fine-tuning robotics applications before deploying to physical hardware. AWS RoboMaker provides a fully managed robotics simulation service that supports large-scale and parallel simulations, and automatically scales the underlying infrastructure based on the complexity of the simulation. RoboMaker also provides pre-built virtual 3D worlds such as indoor rooms, retail stores, and race tracks so you can download, modify, and use these worlds in your simulations, making it quick and easy to get started.

Fleet Management

Once an application has been developed or modified, you would otherwise need to build an over-the-air (OTA) system to securely deploy the application into the robot and later update the application while the robot is in use. AWS RoboMaker provides a fleet management service that has robot registry, security, and fault tolerance built in, so that you can deploy, perform OTA updates, and manage your robotics applications throughout the lifecycle of your robots. You can use RoboMaker fleet management to group your robots and update them accordingly with bug fixes or new features, all with a few clicks in the console.

Benefits

Get started quickly

AWS RoboMaker includes sample robotics applications to help you get started quickly. These provide the starting point for the voice command, recognition, monitoring, and fleet management capabilities that are typically required for intelligent robotics applications. Sample applications come with robotics application code (instructions for the functionality of your robot) and simulation application code (defining the environment in which your simulations will run). The sample simulation applications come with pre-built worlds such as indoor rooms, retail stores, and racing tracks so you can get started in minutes. You can modify and build on the code of the robotics application or simulation application in the development environment or use your own custom applications.

Build intelligent robots

Because AWS RoboMaker is pre-integrated with popular AWS analytics, machine learning, and monitoring services, it’s easy to add functions like video streaming, face and object recognition, voice command and response, or metrics and logs collection to your robotics application. RoboMaker provides extensions for cloud services like Amazon Kinesis Video Streams (video streaming), Amazon Rekognition (image and video analysis), Amazon Lex (speech recognition), Amazon Polly (speech generation), and Amazon CloudWatch (logging and monitoring) to developers who are using Robot Operating System, or ROS. These services are exposed as ROS packages so that you can easily use them to build intelligent functions into your robotics applications without having to learn a new framework or programming language.

Lifecycle management

Manage the lifecycle of a robotics application from building and deploying the application, to monitoring and updating an entire fleet of robots. Using AWS RoboMaker fleet management, you can deploy an application to a fleet of robots. Using the CloudWatch metrics and logs extension for ROS, you can monitor these robots throughout their lifecycle to track CPU usage, speed, memory, battery level, and more. When a robot needs an update, you can use RoboMaker simulation for regression testing before deploying the fix or new feature through RoboMaker fleet management.

Friday, December 7, 2018

Wednesday, October 24, 2018

Sunday, October 14, 2018

Thursday, October 11, 2018

Fwd: OpenMV News

From: "OpenMV" <openmv@openmv.io>

Date: Oct 10, 2018 11:53 PM

Subject: OpenMV News

To: "John" <john.sokol@gmail.com>


Tuesday, October 9, 2018

Monday, September 24, 2018

Goertzel filter

https://en.wikipedia.org/wiki/Goertzel_algorithm

Many applications require the detection of a few discrete sinusoids. The Goertzel filter is an IIR filter that uses feedback to generate a very high-Q bandpass filter whose coefficients are easily generated from the required centre frequency. For a block of N samples and a centre frequency $f_0$ at sample rate $f_s$, the bin index is $k = N f_0 / f_s$ and the filter follows these equations:

$\omega_0 = 2\pi k / N$

$s[n] = x[n] + 2\cos(\omega_0)\,s[n-1] - s[n-2]$

$|X(k)|^2 = s[N-1]^2 + s[N-2]^2 - 2\cos(\omega_0)\,s[N-1]\,s[N-2]$

The most common configuration for using this technique is to measure the signal energy before and after the filter and to compare the two. If the energies are similar, the input signal is centred in the pass band; if the output energy is significantly lower than the input energy, the signal is outside the pass band. The Goertzel algorithm is most commonly implemented as a second-order recursive IIR filter, as shown below.

```matlab
function Xk = goertzel_non_integer_k(x,k)
% Computes an N-point DFT coefficient for a
% real-valued input sequence 'x' where the center
% frequency of the DFT bin is 2*pi*k/N radians/sample
% (k/N cycles/sample).
% N is the length of the input sequence 'x'.
% Positive-valued frequency index 'k' need not be
% an integer but must be in the range 0 to N-1.
% [Richard Lyons, Oct. 2013]

N = length(x);
Alpha = 2*pi*k/N;
Beta = 2*pi*k*(N-1)/N;

% Precompute network coefficients
Two_cos_Alpha = 2*cos(Alpha);
a = cos(Beta);
b = -sin(Beta);
c = sin(Alpha)*sin(Beta) - cos(Alpha)*cos(Beta);
d = sin(2*pi*k);

% Init. delay line contents
w1 = 0;
w2 = 0;

for n = 1:N % Start the N-sample feedback looping
    w0 = x(n) + Two_cos_Alpha*w1 - w2;
    % Delay line data shifting
    w2 = w1;
    w1 = w0;
end

% Complex output: Xk = exp(-1j*Beta)*(w1 - exp(-1j*Alpha)*w2)
Xk = w1*a + w2*c + 1j*(w1*b + w2*d);
end
```
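For readers without MATLAB, here is a minimal Python port of the routine above, assuming a real-valued input list. It checks itself against a brute-force evaluation of $\sum_n x[n]\,e^{-j2\pi kn/N}$, including a non-integer k. `tone_energy_ratio` is a hypothetical helper (not part of Lyons' code) that sketches the before/after energy comparison described earlier: by Parseval's theorem, a real tone centred on bin k (with 0 < k < N/2) puts half of $N\sum x^2$ into $|X(k)|^2$, so the normalized ratio is close to 1 for an on-bin tone and close to 0 for a tone centred on a different integer bin.

```python
import cmath
import math


def goertzel_non_integer_k(x, k):
    """Port of goertzel_non_integer_k: DFT coefficient X(k) of real samples x,
    where k may be non-integer in [0, N-1]."""
    n_total = len(x)
    alpha = 2 * math.pi * k / n_total
    beta = 2 * math.pi * k * (n_total - 1) / n_total
    # Precompute network coefficients (same roles as in the MATLAB version)
    two_cos_alpha = 2 * math.cos(alpha)
    a = math.cos(beta)
    b = -math.sin(beta)
    c = math.sin(alpha) * math.sin(beta) - math.cos(alpha) * math.cos(beta)
    d = math.sin(2 * math.pi * k)
    w1 = w2 = 0.0  # delay line contents
    for sample in x:
        w0 = sample + two_cos_alpha * w1 - w2  # second-order feedback
        w2, w1 = w1, w0  # delay line data shifting
    return complex(w1 * a + w2 * c, w1 * b + w2 * d)


def direct_dft_bin(x, k):
    """Brute-force reference: sum of x[n] * exp(-j*2*pi*k*n/N)."""
    n_total = len(x)
    return sum(v * cmath.exp(-2j * math.pi * k * n / n_total) for n, v in enumerate(x))


def tone_energy_ratio(x, k):
    """Hypothetical helper: |X(k)|^2 normalized so that a real tone centred
    on bin k (0 < k < N/2) gives about 1, using Parseval's theorem."""
    input_energy = sum(v * v for v in x)
    if input_energy == 0:
        return 0.0
    return 2 * abs(goertzel_non_integer_k(x, k)) ** 2 / (len(x) * input_energy)


# Usage: a 64-sample cosine centred on bin 5
x = [math.cos(2 * math.pi * 5 * n / 64) for n in range(64)]
print(goertzel_non_integer_k(x, 5), direct_dft_bin(x, 5))
print(tone_energy_ratio(x, 5), tone_energy_ratio(x, 12))
```

Only one bin is computed per pass, which is why the Goertzel approach is attractive when just a few tones need to be detected instead of a full FFT.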

Quiet Beacon

Saturday, September 22, 2018

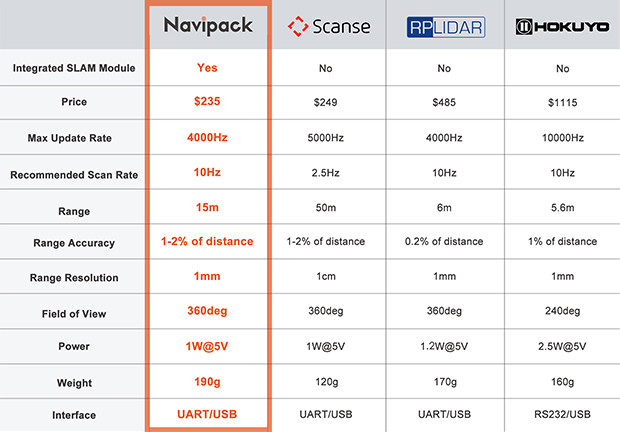

NaviPack LiDAR Navigation Module

https://www.indiegogo.com/projects/navipack-lidar-navigation-module-reinvented#/

https://www.youtube.com/watch?v=SBhIdXVnoZU&feature=share

https://robot.imscv.com/en/product/3D%20LIDAR

NaviPack makes any device smarter and easier to control. It uses the latest LiDAR technology and powerful APIs to create easy solutions for automated devices.

Wednesday, September 12, 2018

Keypoints in computer vision - OpenCV3 techniques

OpenCV3 - Keypoints in Computer Vision by Dr. Adrian Kaehler, Ph.D.

https://www.youtube.com/watch?v=tjuaZGvlBh4

Learning OpenCV 3

Computer Vision in C++ with the OpenCV Library

https://covers.oreillystatic.com/images/0636920044765/lrg.jpg

https://en.wikipedia.org/wiki/Adrian_Kaehler

Another good talk from him:

Future Talk #91 - Machine Vision, Deep Learning and Robotics

https://www.youtube.com/watch?v=kPq4lYGr7rE

A discussion of machine vision, deep learning and robotics with Adrian Kaehler, founder and CEO of Giant.AI and founder of the Silicon Valley Deep Learning Group.

Feynman's technique

So, what the hell is this Leibniz Integral Rule? In its guts, it's about figuring out how the result of a definite integral changes when the limits of integration or the integrand itself depends on some other variable, let's call it α.

Imagine you've got an integral that looks like this:

$$I(\alpha) = \int_{a(\alpha)}^{b(\alpha)} f(x,\alpha)\,dx$$

Here's the goddamn magic: the Leibniz Integral Rule tells us how to find the derivative of this whole shebang with respect to α, i.e., $\frac{dI}{d\alpha}$. And it's a beautiful piece of calculus that lets you swap the order of differentiation and integration under certain conditions.

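The rule states that:

$$\frac{dI}{d\alpha} = \int_{a(\alpha)}^{b(\alpha)} \frac{\partial f(x,\alpha)}{\partial \alpha}\,dx + f(b(\alpha),\alpha)\cdot\frac{db}{d\alpha} - f(a(\alpha),\alpha)\cdot\frac{da}{d\alpha}$$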

Let's break down this glorious mess piece by goddamn piece:

- $\frac{\partial f(x,\alpha)}{\partial \alpha}$: This is the partial derivative of the integrand f(x,α) with respect to the parameter α, treating x as a constant, integrated over the same limits as the original integral. This term accounts for how the integrand itself changes as α varies.

- $f(b(\alpha),\alpha)\cdot\frac{db}{d\alpha}$: This part deals with the upper limit of integration, b(α), which can also be a function of α. We evaluate the integrand at the upper limit and multiply it by the derivative of the upper limit with respect to α. This tells us how the integral changes because the upper boundary is moving.

- $-f(a(\alpha),\alpha)\cdot\frac{da}{d\alpha}$: Similarly, this handles the lower limit of integration, a(α), which can also depend on α. We evaluate the integrand at the lower limit and multiply it by the derivative of the lower limit with respect to α. This tells us how the integral changes because the lower boundary is moving. Note the minus sign here: it's crucial because the lower limit is the "start" of the integration, so moving it forward shrinks the interval instead of extending it.
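To see the three terms working together, here is a small numerical check in Python. The example integral $I(\alpha) = \int_0^{\alpha} \sin(\alpha x)\,dx$ is an illustrative choice, not from the original post: both the integrand and the upper limit depend on α, the lower limit is constant, and the closed form $I(\alpha) = (1 - \cos\alpha^2)/\alpha$ gives an exact derivative to compare against. The quadrature is a plain composite Simpson's rule, assumed accurate enough at 2000 subintervals; all function names are hypothetical.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for g on [lo, hi] with n (made even) subintervals."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


# Illustrative example: f(x, alpha) = sin(alpha*x), a(alpha) = 0, b(alpha) = alpha
def f(x, alpha):
    return math.sin(alpha * x)


def df_dalpha(x, alpha):
    return x * math.cos(alpha * x)  # partial derivative of f with respect to alpha


def integral(alpha):
    return simpson(lambda x: f(x, alpha), 0.0, alpha)


def leibniz_derivative(alpha):
    interior = simpson(lambda x: df_dalpha(x, alpha), 0.0, alpha)
    upper = f(alpha, alpha) * 1.0  # b(alpha) = alpha, so db/dalpha = 1
    lower = f(0.0, alpha) * 0.0    # a(alpha) = 0, so da/dalpha = 0
    return interior + upper - lower


def finite_difference(alpha, h=1e-5):
    """Central difference of the numerically integrated I(alpha)."""
    return (integral(alpha + h) - integral(alpha - h)) / (2 * h)


def closed_form(alpha):
    """Hand derivative of I(alpha) = (1 - cos(alpha^2)) / alpha."""
    return 2 * math.sin(alpha ** 2) - (1 - math.cos(alpha ** 2)) / alpha ** 2


alpha = 1.3
print(leibniz_derivative(alpha), finite_difference(alpha), closed_form(alpha))
```

Dropping the `upper` term makes the first number disagree with the other two, which is a quick way to convince yourself the boundary terms are not optional when the limits move.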

When the Limits are Constant:

Now, here's where it gets particularly slick for solving those stubborn integrals. If the limits of integration a and b are constants (i.e., they don't depend on α), then $\frac{da}{d\alpha} = 0$ and $\frac{db}{d\alpha} = 0$. In this case, the Leibniz Integral Rule simplifies to the much cleaner form:

$$\frac{dI}{d\alpha} = \int_a^b \frac{\partial f(x,\alpha)}{\partial \alpha}\,dx$$

This is the damn magic that Feynman loved. You introduce a parameter α into your integrand in a clever way, differentiate under the integral sign with respect to α, solve the resulting (hopefully easier) integral, and then, in the end, you evaluate your result at a specific value of α that corresponds to your original integral.

The Power:

The power of this technique lies in its ability to transform a difficult integral with respect to x into a potentially simpler integral with respect to x after differentiating with respect to a parameter α. Often, this differentiation with respect to α can bring down powers of x or introduce terms that make the integration with respect to x much more manageable. After you've integrated with respect to x, you're left with a function of α, which you then need to integrate with respect to α to get back to your original integral (plus a constant of integration that you might need to determine using a known value of the original integral for a specific α).
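A classic worked example makes the recipe concrete (the integral is a standard textbook choice, not one from the original text). To evaluate $\int_0^1 \frac{x-1}{\ln x}\,dx$, promote the exponent to a parameter α > 0, differentiate under the integral sign, integrate back in α, and fix the constant with the limit α → 0⁺. Writing the integrand as $\int_0^{\alpha} x^t\,dt$ shows it lies between 0 and α, so $I(\alpha) \to 0$ as α → 0⁺; for α > 0 the differentiated integrand $x^\alpha$ is continuous on [0, 1].

$$
\begin{aligned}
I(\alpha) &= \int_0^1 \frac{x^{\alpha} - 1}{\ln x}\,dx, \qquad \alpha > 0 \\
\frac{dI}{d\alpha} &= \int_0^1 \frac{\partial}{\partial \alpha}\left(\frac{x^{\alpha} - 1}{\ln x}\right)dx = \int_0^1 x^{\alpha}\,dx = \frac{1}{\alpha + 1} \\
I(\alpha) &= \ln(\alpha + 1) + C, \qquad \lim_{\alpha \to 0^+} I(\alpha) = 0 \;\Rightarrow\; C = 0 \\
\int_0^1 \frac{x - 1}{\ln x}\,dx &= I(1) = \ln 2
\end{aligned}
$$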

Conditions for Validity (Don't Be a Goddamn Fool):

Like any powerful tool, the Leibniz Integral Rule comes with some conditions you can't just ignore:

- Continuity of $f(x,\alpha)$: The function f(x,α) must be continuous with respect to both x and α on the region of integration.

- Continuity of $\frac{\partial f(x,\alpha)}{\partial \alpha}$: The partial derivative of f with respect to α must also be continuous on the region of integration.

- Differentiability of Limits: If the limits of integration a(α) and b(α) depend on α, they must be differentiable with respect to α.

If these conditions are met, then you're goddamn golden to swap the order of differentiation and integration.

Why the Obscurity?

As Feynman pointed out, it's a damn shame this technique isn't emphasized more. Maybe it's because it requires a bit of ingenuity in introducing the parameter α. It's not a plug-and-chug method like some basic integration rules. It demands a bit of creative thinking, a sense of how a parameter might simplify the integrand. But when it works, it works like a goddamn charm, turning integrals that would make seasoned mathematicians sweat into elegant solutions.

So, the Leibniz Integral Rule, or "Feynman's technique" (the non-quantum kind, you hear me?), is a powerful and often overlooked tool in the integrator's arsenal. It's about understanding how integrals behave when their guts change, and it can be the goddamn key to unlocking some of the toughest mathematical puzzles out there. Don't you forget it.

Complex numbers, Quaternions and Octonions

https://en.wikipedia.org/wiki/Real_number 2^0 = 1 Dimension

https://en.wikipedia.org/wiki/Complex_number 2^1 = 2 Dimensions

https://en.wikipedia.org/wiki/Quaternion 2^2 = 4 Dimensions

https://en.wikipedia.org/wiki/Octonion 2^3 = 8 Dimensions

https://en.wikipedia.org/wiki/Sedenion 2^4 = 16 Dimensions

Trigintaduonions 2^5 = 32 Dimensions

- From $\mathbb{R}$ to $\mathbb{C}$ you gain algebraic closure (but you throw away ordering).
- From $\mathbb{C}$ to $\mathbb{H}$ we throw away commutativity.
- From $\mathbb{H}$ to $\mathbb{O}$ we throw away associativity.
- From $\mathbb{O}$ to $\mathbb{S}$ we throw away multiplicative normedness.
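The doubling pattern 2^0, 2^1, 2^2, ... comes from the Cayley-Dickson construction, which builds each algebra as pairs of elements of the previous one. Below is a minimal Python sketch, assuming the doubling formula $(a,b)(c,d) = (ac - d^{*}b,\; da + bc^{*})$ with conjugate $(a,b)^{*} = (a^{*}, -b)$ (one of several equivalent sign conventions). It checks commutativity and associativity on basis elements (enough, since both properties are multilinear) and measures $\big|\,|xy| - |x||y|\,\big|$ on random pairs. Helper names are illustrative.

```python
import itertools
import math
import random


def conj(x):
    """Cayley-Dickson conjugate: (a, b)* = (a*, -b); reals are self-conjugate."""
    if len(x) == 1:
        return list(x)
    h = len(x) // 2
    return conj(x[:h]) + [-v for v in x[h:]]


def mul(x, y):
    """Product of two elements of equal power-of-two length via
    (a, b)(c, d) = (ac - d*b, da + bc*)."""
    if len(x) == 1:
        return [x[0] * y[0]]
    h = len(x) // 2
    a, b, c, d = x[:h], x[h:], y[:h], y[h:]
    left = [p - q for p, q in zip(mul(a, c), mul(conj(d), b))]
    right = [p + q for p, q in zip(mul(d, a), mul(b, conj(c)))]
    return left + right


def norm(x):
    return math.sqrt(sum(v * v for v in x))


def basis(dim):
    return [[1.0 if j == i else 0.0 for j in range(dim)] for i in range(dim)]


def close(x, y, tol=1e-9):
    return all(abs(p - q) < tol for p, q in zip(x, y))


def is_commutative(dim):
    return all(close(mul(x, y), mul(y, x))
               for x, y in itertools.product(basis(dim), repeat=2))


def is_associative(dim):
    return all(close(mul(mul(x, y), z), mul(x, mul(y, z)))
               for x, y, z in itertools.product(basis(dim), repeat=3))


def max_norm_defect(dim, trials=100, seed=1):
    """Largest | |xy| - |x||y| | over seeded random pairs."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = [rng.uniform(-1, 1) for _ in range(dim)]
        y = [rng.uniform(-1, 1) for _ in range(dim)]
        worst = max(worst, abs(norm(mul(x, y)) - norm(x) * norm(y)))
    return worst


for name, dim in [("C", 2), ("H", 4), ("O", 8)]:
    print(name, "commutative:", is_commutative(dim), "associative:", is_associative(dim))
for name, dim in [("O", 8), ("S", 16)]:
    print(name, "max norm defect:", max_norm_defect(dim))
```

Nothing in `mul` changes from level to level; the properties disappear purely because the doubling is applied to an algebra that has already lost something.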

- Euler Angles

- Angle Axis

- Rotation matrix
  - 9 scalars, more complex regularization
  - Concatenation: 27 multiplications
  - Rotating a vector: 9 multiplications

- Quaternions
  - 4 scalars, easy regularization
  - Concatenation: 16 multiplications
  - Rotating a vector: 18 multiplications
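The operation counts above can be made concrete with a small Python sketch, assuming quaternions stored as (w, x, y, z) tuples and rotation matrices as nested lists. `quat_mul` is the 16-multiplication Hamilton product, `mat_mul` the 27-multiplication 3×3 product and `mat_vec` the 9-multiplication matrix-vector product. `rotate_with_quat` uses the plain $q\,(0,\mathbf{v})\,q^{*}$ sandwich (two Hamilton products), not the optimized 18-multiplication form counted in the list. All function names are illustrative.

```python
import math


def quat_mul(p, q):
    """Hamilton product of quaternions stored as (w, x, y, z): 16 multiplications."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def quat_from_axis_angle(axis, angle):
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    ax, ay, az = axis
    n = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle / 2) / n
    return (math.cos(angle / 2), ax * s, ay * s, az * s)


def rotate_with_quat(q, v):
    """v' = q (0, v) q* for a unit quaternion q (two Hamilton products)."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return (rx, ry, rz)


def quat_to_matrix(q):
    """3x3 rotation matrix equivalent to the unit quaternion q."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def mat_vec(m, v):
    """Matrix times vector: 9 multiplications."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def mat_mul(a, b):
    """3x3 matrix product: 27 multiplications."""
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


q = quat_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2)  # 90 degrees about z
print(rotate_with_quat(q, (1.0, 0.0, 0.0)))  # approximately (0, 1, 0)
```

Composing two rotations by multiplying their quaternions and then converting gives the same matrix as multiplying the two matrices, so the cheaper 16-multiplication concatenation loses nothing.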

Quaternions and spatial rotation - Wikipedia

William Hamilton invented quaternions in 1843 as a method to allow him to multiply and divide vectors, rotating and stretching them.

$\tilde{Q} = q_w + q_x i + q_y j + q_z k$

OpenSCAD

Singularities

A Gyro-Free Quaternion-based Attitude Determination System Suitable for Implementation Using Low-Cost Sensors.

Videos

https://www.youtube.com/watch?v=dul0mui292Q Math for Game Developers - Perspective Matrix Part 2

https://www.youtube.com/watch?v=8gST0He4sdE Hand Calculation of Quaternion Rotation

https://www.youtube.com/watch?v=0_XoZc-A1HU FamousMathProbs13b: The rotation problem and Hamilton's discovery of quaternions (II)